Homework 06: The Impact of Changes in Network Connectivity

In machine learning, metrics such as accuracy and loss are typically used to quantify differences in behavior between neural network architectures. This differs considerably from what computational neuroscientists examine when comparing models, and from what experimentalists measure in actual brains. As we will see in this assignment, monitoring how different networks function as they process input can reveal important insights about changes in connectivity.

For this assignment, record your responses to the following activities in the README.md file in the homework06 folder of your assignments GitLab repository and push your work by 11:59 PM Friday, November 22.

Activity 0: Branching

As discussed in class, each homework assignment must be completed in its own git branch; this lets you keep the work for each assignment separate and use the merge request workflow.

To create a homework06 branch in your local repository, follow the instructions below:

    $ cd path/to/cse-40171-fa19-assignments # Go to assignments repository
    $ git remote add upstream https://gitlab.com/wscheirer/cse-40171-fa19-assignments # Add the main class repository as a remote named upstream
    $ git fetch upstream # Fetch the latest changes from the upstream remote
    $ git pull upstream master # Pull the files for homework06
    $ git checkout -b homework06 # Create homework06 branch and check it out
    $ cd homework06 # Go into homework06 folder

Once these commands have completed successfully, you are ready to add, commit, and push any work required for this assignment.

Activity 1: Implement a Small Feed-Forward Network (25 Points)

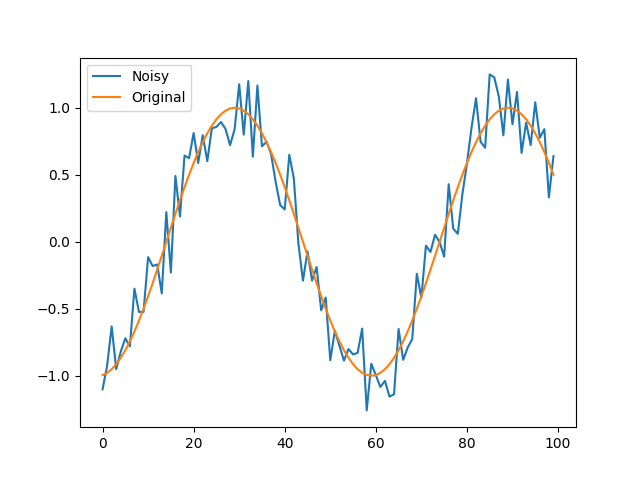

In this assignment, we will compare two different network architectures: one feed-forward, and the other recurrent. We know from class that the nature of the connectivity between units in a network can change its internal behavior — even when the training regime is the same. Here we will look at a specific instance of that difference. To begin, consider the sine.py snippet of code in the homework06 directory you have cloned. The code found in that source file generates a dataset of 10,000 noisy sine waves (8,000 training and 2,000 testing) over 100 different positions, where a trained model's objective is to estimate a denoised signal from each noisy input. For example, here is a noisy wave and its corresponding noise-free signal:

Using PyTorch, add code to the provided snippet to define, train, and test a two-layer feed-forward neural network. Name your source file simpleFeedForward.py. Both layers should be linear layers (nn.Linear), and you can use tanh as the activation function. Use the following hyperparameters:

- Hidden Layer Size: 30
- Optimizer for Training: Adam (torch.optim.Adam)
- Learning Rate: lr=1e-2
- Loss Function: L1 (nn.L1Loss)
- Number of Training Epochs: 300
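As a point of reference, with its default reduction='mean', PyTorch's nn.L1Loss averages the absolute error over every element of its inputs. For a batch of N waves with P = 100 positions each, writing $\hat{y}$ for the network's prediction and $y$ for the noise-free signal (notation introduced here, not taken from sine.py), the loss you will be printing is:

```latex
\mathcal{L}_{1}(\hat{y}, y) = \frac{1}{NP} \sum_{n=1}^{N} \sum_{p=1}^{P} \left| \hat{y}_{n,p} - y_{n,p} \right|, \qquad P = 100
```

So a loss of, say, 0.1 means the prediction is off by 0.1 on average at each position.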

Print the training loss over the set of 8,000 waves every 20 epochs. After the model has trained, evaluate the loss on the test set of 2,000 waves and have your program print this value as well. If all goes well, you should achieve a reasonably low loss on both the training and test sets (that is, the small network can learn this denoising task). Record your training and test loss values in your README.md file as part of your answer to this activity.
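The following is a minimal sketch of what simpleFeedForward.py might contain, not a reference solution. It assumes each wave is a 100-element tensor, so the network maps 100 → 30 → 100, and it trains full-batch (one Adam step per epoch over all training waves), which the assignment does not specify. Because the contents of sine.py are not reproduced here, make_noisy_sines is a hypothetical stand-in; replace it with the tensors the provided snippet actually builds.

```python
import math

import torch
import torch.nn as nn


def make_noisy_sines(n_waves, n_positions=100, noise_std=0.1):
    """HYPOTHETICAL stand-in for sine.py: random-phase sine waves plus Gaussian noise."""
    x = torch.linspace(0, 2 * math.pi, n_positions)   # (P,)
    phase = torch.rand(n_waves, 1) * 2 * math.pi       # (N, 1), one phase per wave
    clean = torch.sin(x + phase)                       # (N, P) noise-free targets
    noisy = clean + noise_std * torch.randn_like(clean)
    return noisy, clean


class SimpleFeedForward(nn.Module):
    """Two nn.Linear layers with tanh between them: 100 -> 30 -> 100."""

    def __init__(self, n_positions=100, hidden_size=30):
        super().__init__()
        self.fc1 = nn.Linear(n_positions, hidden_size)
        self.fc2 = nn.Linear(hidden_size, n_positions)

    def forward(self, x):
        return self.fc2(torch.tanh(self.fc1(x)))


def train(model, x, y, epochs=300, lr=1e-2, log_every=20):
    """Full-batch training with Adam and L1 loss; prints the loss every `log_every` epochs."""
    optimizer = torch.optim.Adam(model.parameters(), lr=lr)
    loss_fn = nn.L1Loss()
    model.train()
    for epoch in range(1, epochs + 1):
        optimizer.zero_grad()
        loss = loss_fn(model(x), y)
        loss.backward()
        optimizer.step()
        if epoch % log_every == 0:
            print(f"epoch {epoch:3d}  train L1 loss {loss.item():.4f}")


@torch.no_grad()
def evaluate(model, x, y):
    """Mean L1 loss of the model on (x, y) without tracking gradients."""
    model.eval()
    return nn.L1Loss()(model(x), y).item()


if __name__ == "__main__":
    torch.manual_seed(0)
    x_train, y_train = make_noisy_sines(8000)
    x_test, y_test = make_noisy_sines(2000)
    model = SimpleFeedForward()
    train(model, x_train, y_train)
    print(f"test L1 loss {evaluate(model, x_test, y_test):.4f}")
```

Mini-batching with a DataLoader is an equally valid choice; full-batch simply keeps the sketch short, since 8,000 waves of 100 values fit comfortably in memory.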

Activity 2: Implement a Small Recurrent Network (25 Points)

Now let's change the connectivity of the feed-forward network to make it recurrent. Copy your code for the feed-forward network to a new source file named simpleRNN.py. Replace the first Linear layer with a recurrent layer (nn.RNN). You can use the same hyperparameters specified in Activity 1.
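One way to sketch the change, assuming each wave is fed to nn.RNN as a sequence of 100 scalar positions (an interpretation; the assignment does not fix the input layout): the RNN replaces the first Linear layer, its tanh nonlinearity (the nn.RNN default) plays the role of the activation, and a Linear readout maps each 30-dimensional hidden state to one value per position. Feeding the whole wave as a single time step (input_size=100) would also match the wording, but with a sequence of length one the recurrent weights are never used.

```python
import torch
import torch.nn as nn


class SimpleRNN(nn.Module):
    """nn.RNN in place of the first Linear layer, followed by a Linear readout.

    Assumption: each wave is a sequence of 100 scalar positions, so the RNN
    sees input shaped (batch, 100, 1) and the readout produces one denoised
    value per position.
    """

    def __init__(self, hidden_size=30):
        super().__init__()
        self.rnn = nn.RNN(input_size=1, hidden_size=hidden_size,
                          nonlinearity="tanh", batch_first=True)
        self.fc = nn.Linear(hidden_size, 1)

    def forward(self, x):                  # x: (batch, positions)
        h, _ = self.rnn(x.unsqueeze(-1))   # h: (batch, positions, hidden_size)
        return self.fc(h).squeeze(-1)      # (batch, positions)
```

Since forward takes and returns (batch, 100) tensors, the training and evaluation loop from your Activity 1 code should work unchanged.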

As you did in Activity 1, print the training loss over the set of 8,000 waves every 20 epochs. After the model has trained, evaluate the loss on the test set of 2,000 waves and have your program print this value as well. If all goes well, you should achieve a reasonably low loss on both the training and test sets (that is, the small recurrent network can learn this denoising task). Record your training and test loss values in your README.md file as part of your answer to this activity.

Question: Which network performs better on this denoising task, based on the loss values? Record your answer in your README.md file.

Activity 3: Record the Activations from the Two Networks (25 Points)

With an understanding of the empirical performance of the networks based on loss, let's now look at another aspect of their behavior, one that might provide more insight into the nature of the functions the networks have learned. Add code to each network's source file to record the activations produced by applying the activation function to the output of the last Linear layer. This is akin to a neuroscientist recording the responses of a collection of neurons responsible for processing some input.

To compare the two networks, we need the same test data to put them on a common footing. Download this binary dump of an out-of-dataset test set of PyTorch tensors (that is, data different from what the provided generation code produces on each run). In your source code for each network, load this out-of-dataset test data, run it through the trained network, and compute the loss on the resulting predictions. You can add this code directly after the place where you compute the training loss and the within-dataset test loss. Record this test loss value for each network in your README.md file as part of your answer to this activity. Do not be alarmed if these loss values are very high: in both cases, this data is drawn from a different distribution than the one you trained on.
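A sketch of the out-of-dataset evaluation, assuming the downloaded file is a torch.save dump of a (noisy, clean) tuple of tensors shaped (num_waves, 100). The actual layout of the file is not described here, so inspect it first (for example, print type(torch.load(path))) and adapt the unpacking. The file name ood_test.pt is hypothetical.

```python
import os
import tempfile

import torch
import torch.nn as nn


@torch.no_grad()
def out_of_dataset_loss(model, path):
    """L1 loss of `model` on a saved test set.

    Assumes the file holds a (noisy, clean) tuple of tensors, each shaped
    (num_waves, 100); adapt the unpacking if the real dump differs.
    """
    noisy, clean = torch.load(path, map_location="cpu")  # CPU in case it was saved on a GPU
    model.eval()
    return nn.L1Loss()(model(noisy), clean).item()


if __name__ == "__main__":
    # Demo with a hypothetical file of random tensors and an untrained model.
    path = os.path.join(tempfile.mkdtemp(), "ood_test.pt")
    torch.save((torch.randn(5, 100), torch.randn(5, 100)), path)
    model = nn.Sequential(nn.Linear(100, 30), nn.Tanh(), nn.Linear(30, 100))
    print(f"out-of-dataset L1 loss: {out_of_dataset_loss(model, path):.4f}")
```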

Hint: there are a few strategies for recording activations in PyTorch. Feel free to do some external research to figure this part out. Here is an organizational strategy to use after the activations are accessible: make a dictionary that saves the activations as they come in, and then concatenate all of those saved outputs to get the activation pattern for the target layer over the entire dataset.
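One common strategy is a forward hook (Module.register_forward_hook) combined with the dictionary-and-concatenate organization the hint describes. The sketch below hooks whichever module produces the activations you want. In its demo, a hypothetical nn.Sequential ends in an nn.Tanh applied to the last Linear layer, so the hook goes on that final module. If your model calls torch.tanh inside forward rather than using an nn.Tanh module, there is no module to hook for the activation itself; either switch to nn.Tanh or hook the last Linear layer and apply torch.tanh to what you record.

```python
from collections import defaultdict

import torch
import torch.nn as nn


def record_activations(model, layer, batches, key="target"):
    """Return `layer`'s outputs for every batch, concatenated along dim 0.

    A forward hook stores each batch's output in a dictionary as it comes
    in; once all batches have run, the saved pieces are concatenated into
    one activation pattern for the whole dataset.
    """
    saved = defaultdict(list)

    def hook(module, inputs, output):
        saved[key].append(output.detach().cpu())

    handle = layer.register_forward_hook(hook)
    try:
        model.eval()
        with torch.no_grad():
            for x in batches:
                model(x)
    finally:
        handle.remove()  # stop recording once we are done
    return torch.cat(saved[key], dim=0)


if __name__ == "__main__":
    # Hypothetical model: Linear -> tanh -> Linear -> tanh, so model[-1] is
    # the activation function applied to the last Linear layer's output.
    model = nn.Sequential(nn.Linear(100, 30), nn.Tanh(), nn.Linear(30, 100), nn.Tanh())
    data = torch.randn(10, 100)
    acts = record_activations(model, model[-1], data.split(4))
    print(acts.shape)  # torch.Size([10, 100])
```

Removing the hook in a finally block keeps later forward passes (for example, another evaluation) from silently appending to the saved activations.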

Activity 4: Compare the Activations from the Two Networks (25 Points)

Use matplotlib to plot the activations from the last layer of the feed-forward network and the recurrent network for the first five test samples of the out-of-dataset test data (the data you loaded externally in Activity 3). The x-axis should reflect the position on the sine wave (recall that each data sample has 100 positions), while the y-axis should reflect the activation value at each position. Save these plots and include them in your README.md file. The activation patterns of the two networks should look very different. Why do you suppose that is the case? Record your answer to this question in your README.md file.
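A plotting sketch with matplotlib, assuming the recorded activations are tensors (or NumPy arrays) with one row per test sample and 100 positions per row; a trailing singleton dimension, such as a per-position readout of shape (samples, 100, 1) would produce, is flattened away. Drawing both networks side by side on a shared y-axis is a presentation choice that makes the contrast easy to see; two separate figures work just as well. The random activations in the demo are placeholders, not real network outputs.

```python
import os
import tempfile

import matplotlib
matplotlib.use("Agg")  # render straight to image files; no display needed
import matplotlib.pyplot as plt
import torch


def plot_activations(ff_acts, rnn_acts, out_path, n_samples=5):
    """Save side-by-side plots of last-layer activations for the first n_samples."""
    fig, axes = plt.subplots(1, 2, figsize=(12, 4), sharey=True)
    for ax, acts, title in zip(axes, (ff_acts, rnn_acts), ("Feed-forward", "Recurrent")):
        acts = acts.reshape(acts.shape[0], -1)  # (samples, 100, 1) -> (samples, 100)
        positions = range(acts.shape[1])
        for i in range(n_samples):
            ax.plot(positions, acts[i].tolist(), label=f"sample {i}")
        ax.set_title(f"{title} network")
        ax.set_xlabel("Position on sine wave")
        ax.legend()
    axes[0].set_ylabel("Activation value")
    fig.tight_layout()
    fig.savefig(out_path)
    plt.close(fig)


if __name__ == "__main__":
    # Demo with random placeholder activations, NOT real network outputs.
    out = os.path.join(tempfile.mkdtemp(), "activations.png")
    plot_activations(torch.rand(5, 100), torch.rand(5, 100, 1), out)
    print("saved", out)
```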

Feedback

If you have any questions, comments, or concerns regarding the course, please provide your feedback at the end of your README.md.

Submission

To submit your assignment, please commit your work to the homework06 folder of your homework06 branch in your assignments GitLab repository:

    $ cd path/to/cse-40171-fa19-assignments # Go to assignments repository
    $ git checkout master # Make sure we are in master branch
    $ git pull --rebase # Make sure we are up-to-date with GitLab
    $ git checkout homework06 # Switch to the homework06 branch created in Activity 0
    $ cd homework06 # Go to homework06 directory
    ...
    $ $EDITOR README.md # Edit appropriate README.md
    $ git add README.md # Mark changes for commit
    $ git commit -m "homework06: complete" # Record changes
    ...
    $ git push -u origin homework06 # Push branch to GitLab

Procedure for submitting your work: create a merge request following the process described here, but be sure to change the target branch from wscheirer/cse-40567-sp19-assignments to your personal fork's master branch so that your code is not visible to other students. In addition, assign this merge request to our TA (sabraha2) and add wscheirer as an approver (so that all class staff can track your submission).