Open World Recognition

Summer 2011 - Present

Description

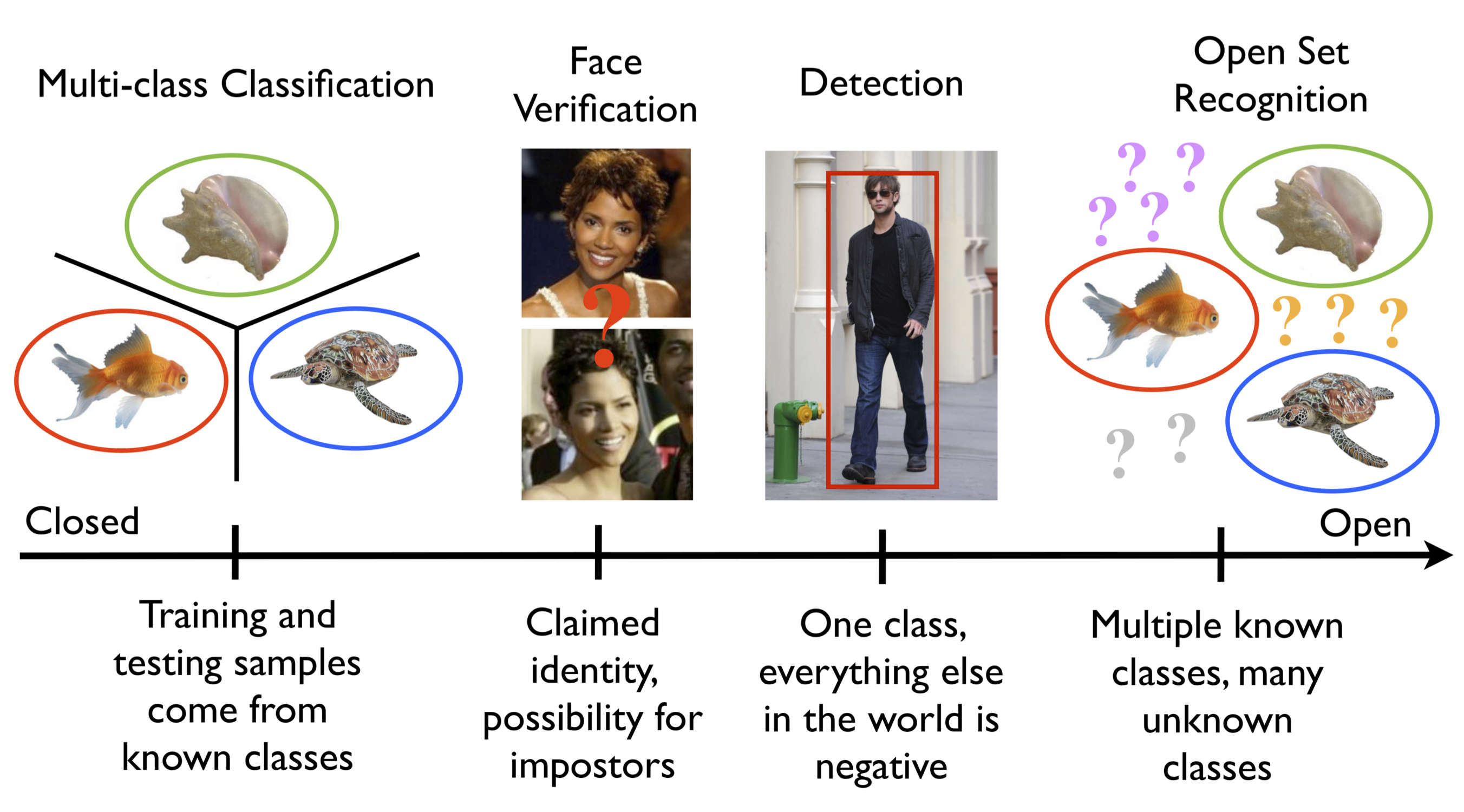

Both recognition and classification are common terms in computer vision. What is the difference? In classification, one assumes there is a given set of classes between which we must discriminate. For recognition, we assume there are some classes we can recognize in a much larger space of things we do not recognize. A motivating question for our work here is: What is the general object recognition problem? This question, of course, is a central theme in vision. How one should approach multi-class recognition is still an open issue. Should it be performed as a series of binary classifications, or by detection, where a search is performed for each of the possible classes? What happens when some classes are ill-sampled, not sampled at all or undefined?

The general term recognition suggests that the representation can handle different patterns, often defined by discriminating features. It also suggests that the patterns to be recognized will appear in general settings, visually mixed with many classes. For some problems, however, we do not need, and often cannot have, knowledge of the entire set of possible classes. For instance, in a recognition application for biologists, a single species of fish might be of interest. However, the classifier must consider the set of all other possible objects in relevant settings as potential negatives. Similarly, verification problems for security-oriented face matching constrain the target of interest to a single claimed identity, while considering the set of all other possible people as potential impostors. In general object recognition, there is a finite set of known objects amid a myriad of unknown objects, combinations, and configurations, so labeling something as new, novel, or unknown should always be a valid outcome. This leads to what is sometimes called "open set" recognition, in contrast to systems that make closed world assumptions or use "closed set" evaluation.
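To make "unknown as a valid outcome" concrete, here is a minimal sketch of the simplest open set decision rule: choose the best-scoring known class, but return an "unknown" label when even that score falls below a threshold. This is a generic thresholding baseline, not the 1-vs-Set Machine, W-SVM, or EVM. The function name `open_set_predict`, the score dictionary, the class name, and the threshold values are hypothetical and used only for illustration.

```python
from typing import Mapping

UNKNOWN = "unknown"  # hypothetical label reserved for novel inputs


def open_set_predict(scores: Mapping[str, float], threshold: float) -> str:
    """Return the highest-scoring known class, or UNKNOWN if no known
    class scores at least `threshold`.

    Assumption of this sketch: `scores` maps each known class to a score
    where larger means "more likely to be this class".
    """
    if not scores:
        # With no known classes at all, every input is unknown.
        return UNKNOWN
    label, best = max(scores.items(), key=lambda kv: kv[1])
    # Rejection is always permitted: a low best score is treated as
    # evidence that the input lies outside the known classes.
    return label if best >= threshold else UNKNOWN


if __name__ == "__main__":
    # Single species of interest (the field-biology example): inputs that
    # do not match it are rejected rather than forced into a known class.
    print(open_set_predict({"target_fish": 0.92}, threshold=0.5))  # target_fish
    print(open_set_predict({"target_fish": 0.12}, threshold=0.5))  # unknown
```

A closed set classifier would simply return the arg max; the only change here is the reject branch. A single threshold on uncalibrated scores is only a baseline, and choosing where to draw that boundary is itself part of the open set problem.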

This work explores the nature of open set recognition and formalizes its definition as a constrained minimization problem. The open set recognition problem is not well addressed by existing algorithms because it requires strong generalization. As steps towards a solution, we introduce the novel "1-vs-Set Machine", "W-SVM" and "EVM" learning formulations. The overall methodology applies to several applications in computer vision where open set recognition is a challenging problem, including object recognition and face verification. Subsequent work has developed novel loss formulations that take into consideration the human perception of novelty, as well as a theoretical framework for studying novelty in visual recognition. Current work is developing more comprehensive open world learning formulations, which involve not just detecting novelty, but characterizing it and incorporating it back into a trained model. These latter explorations have included work on human activity recognition and handwritten document transcription.
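For the shape of the constrained minimization mentioned above, the following is a sketch in the notation as we recall it from "Towards Open Set Recognition" (T-PAMI 2013); that paper should be consulted for the exact definitions. Here f is a recognition function from a hypothesis space H, S_o is a ball containing the positive training data, the open space O is roughly S_o minus neighborhoods of those training samples, R_ε is an empirical (training) risk, and λ_r is a regularization constant.

```latex
R_{\mathcal{O}}(f) = \frac{\int_{\mathcal{O}} f(x)\,dx}{\int_{S_o} f(x)\,dx},
\qquad
\operatorname*{arg\,min}_{f \in \mathcal{H}} \left\{ R_{\mathcal{O}}(f) + \lambda_r \, R_{\varepsilon}(f) \right\}
```

The first term, open space risk, penalizes a function for labeling regions of feature space far from any training data as a known class; the second keeps it accurate on the training samples. Balancing the two is what separates open set learning from ordinary empirical risk minimization.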

This work was supported by ONR MURI Award No. N00014-08-1-0638, NSF IIS-1320956, FAPESP 2010/05647-4, Army SBIR W15P7T-12-C-A210, DARPA and the Army Research Office under contract HR001120C0055, and Microsoft.

Publications

- "Open Issues in Open World Learning," AAAI AI Magazine, April 2025.

- "Human Activity Recognition in an Open World," Journal of Artificial Intelligence Research, December 2024. [pdf] [code] [bibtex]

@article{PrijateljJAIR2024,
  author = {Prijatelj, Derek S. and
    Grieggs, Samuel and
    Huang, Jin and
    Du, Dawei and
    Shringi, Ameya and
    Funk, Christopher and
    Kaufman, Adam and
    Robertson, Eric and
    Scheirer, Walter J.},
  title = {Human Activity Recognition in an Open World},
  journal = {Journal of Artificial Intelligence Research},
  volume = {81},
  pages = {935--971},
  year = {2024}
}

- "Measuring Human Perception to Improve Open Set Recognition," IEEE Transactions on Pattern Analysis and Machine Intelligence (T-PAMI), September 2023. [pdf] [supp. material] [code] [data] [bibtex]

@article{huang2023measuring,
  title={Measuring Human Perception to Improve Open Set Recognition},
  author={Huang, Jin and Prijatelj, Derek and Dulay, Justin and Scheirer, Walter},
  journal={IEEE Transactions on Pattern Analysis and Machine Intelligence},
  volume={45},
  number={9},
  pages={11382--11389},
  year={2023},
  publisher={IEEE}
}

- "Handwriting Recognition with Novelty," International Conference on Document Analysis and Recognition (ICDAR), September 2021. [pdf] [bibtex]

@inproceedings{Prijatelj_ICDAR2021,
  author = {Derek Prijatelj and
    Samuel Grieggs and
    Futoshi Yumoto and
    Eric Robertson and
    Walter J. Scheirer},
  title = {Handwriting Recognition with Novelty},
  booktitle = {International Conference on Document Analysis and Recognition (ICDAR)},
  year = {2021}
}

- "Towards a Unifying Framework for Formal Theories of Novelty," AAAI Conference on Artificial Intelligence (AAAI 2021), Senior Member Track, February 2021. [pdf] [bibtex]

@inproceedings{Boult21,
  author = {Terrance Boult and
    Przemyslaw Grabowicz and
    Derek Prijatelj and
    Lawrence Holder and
    Joshua Alspector and
    Mohsen Jafarzadeh and
    Touqeer Ahmad and
    Akshay Dhamija and
    Chunchun Li and
    Steve Cruz and
    Abhinav Shrivastava and
    Carl Vondrick and
    Walter J. Scheirer},
  title = {Towards a Unifying Framework for Formal Theories of Novelty},
  booktitle = {AAAI Conference on Artificial Intelligence (AAAI 2021)},
  year = {2021},
}

- "Gesture-based User Identity Verification as an Open Set Problem for Smartphones," IAPR International Conference on Biometrics, June 2019.

- "Learning and the Unknown: Surveying Steps Toward Open World Recognition," AAAI Conference on Artificial Intelligence (AAAI 2019), Senior Member Track, January 2019. [pdf] [bibtex]

@inproceedings{Boult18,
  author = {Terrance Boult and
    Akshay Dhamija and
    Manuel Gunther and
    James Henrydoss and
    Walter J. Scheirer},
  title = {Learning and the Unknown: Surveying Steps Toward Open World Recognition},
  booktitle = {AAAI Conference on Artificial Intelligence (AAAI 2019)},
  year = {2019},
}

- "The Limits and Potentials of Deep Learning for Robotics," International Journal of Robotics Research, April 2018. [pdf] [bibtex]

@article{Sunderhauf2018,
  author = {Niko Sunderhauf and
    Oliver Brock and
    Walter J. Scheirer and
    Raia Hadsell and
    Dieter Fox and
    Jurgen Leitner and
    Ben Upcroft and
    Pieter Abbeel and
    Wolfram Burgard and
    Michael Milford and
    Peter Corke},
  title = {The Limits and Potentials of Deep Learning for Robotics},
  journal = {International Journal of Robotics Research},
  volume = {37},
  number = {4-5},
  year = {2018}
}

- "The Extreme Value Machine," IEEE Transactions on Pattern Analysis and Machine Intelligence (T-PAMI), March 2018. [pdf] [supp. material] [code] [bibtex]

@article{Rudd_2018_TPAMI,
  author = {Ethan Rudd and Lalit P. Jain and Walter J. Scheirer and Terrance Boult},
  title = {The Extreme Value Machine},
  journal = {IEEE Transactions on Pattern Analysis and Machine Intelligence (T-PAMI)},
  volume = {40},
  number = {3},
  month = {March},
  year = {2018}
}

- "Open Set Fingerprint Spoof Detection Across Novel Fabrication Materials," IEEE Transactions on Information Forensics and Security (T-IFS), November 2015. [pdf] [supp. material] [bibtex]

@article{Rattani_2015_TIFS,
  author = {Ajita Rattani and Walter J. Scheirer and Arun Ross},
  title = {Open Set Fingerprint Spoof Detection Across Novel Fabrication Materials},
  journal = {IEEE Transactions on Information Forensics and Security (T-IFS)},
  volume = {10},
  number = {11},
  month = {November},
  year = {2015}
}

- "Probability Models for Open Set Recognition," IEEE Transactions on Pattern Analysis and Machine Intelligence (T-PAMI), November 2014. [pdf] [supp. material] [errata] [bibtex]

@article{Scheirer_2014_TPAMIb,
  author = {Walter J. Scheirer and Lalit P. Jain and Terrance E. Boult},
  title = {Probability Models for Open Set Recognition},
  journal = {IEEE Transactions on Pattern Analysis and Machine Intelligence (T-PAMI)},
  volume = {36},
  number = {11},
  month = {November},
  year = {2014}
}

- "Multi-Class Open Set Recognition Using Probability of Inclusion," Proceedings of the European Conference on Computer Vision (ECCV), September 2014. [pdf] [supp. material] [bibtex]

@InProceedings{Jain_2014_ECCV,
  author = {Lalit P. Jain and Walter J. Scheirer and Terrance E. Boult},
  title = {Multi-Class Open Set Recognition Using Probability of Inclusion},
  booktitle = {The European Conference on Computer Vision (ECCV)},
  month = {September},
  year = {2014}
}

- "Open Set Source Camera Attribution and Device Linking," Pattern Recognition Letters (PRL), April 2014. [pdf] [bibtex]

@article{Costa_2014_PRL,
  author = {Filipe O. Costa and Ewerton Silva and Michael Eckmann and Walter J. Scheirer and Anderson Rocha},
  title = {Open Set Source Camera Attribution and Device Linking},
  journal = {Pattern Recognition Letters},
  volume = {36},
  month = {April},
  year = {2014},
}

- "Towards Open Set Recognition," IEEE Transactions on Pattern Analysis and Machine Intelligence (T-PAMI), July 2013. [pdf] [bibtex]

@article{Scheirer_2013_TPAMI,
  author = {Walter J. Scheirer and Anderson Rocha and Archana Sapkota and Terrance E. Boult},
  title = {Towards Open Set Recognition},
  journal = {IEEE Transactions on Pattern Analysis and Machine Intelligence (T-PAMI)},
  volume = {35},
  number = {7},
  month = {July},
  year = {2013}
}

- "Animal Recognition in the Mojave Desert: Vision Tools for Field Biologists," Proceedings of the IEEE Workshop on the Applications of Computer Vision (WACV), January 2013. Best Paper Award. [pdf] [bibtex]

@inproceedings{Wilber_2013_WACV,
  author = {Michael Wilber and Walter J. Scheirer and Phil Leitner and Brian Heflin
    and James Zott and Daniel Reinke and David Delaney and Terrance E. Boult},
  title = {Animal Recognition in the Mojave Desert: Vision Tools for Field Biologists},
  booktitle = {Proceedings of the IEEE Workshop on the Applications of Computer Vision (WACV)},
  month = {January},
  year = {2013}
}

- "Detecting and Classifying Scars, Marks, and Tattoos Found in the Wild," Proceedings of the IEEE International Conference on Biometrics: Theory, Applications and Systems (BTAS), September 2012. [pdf] [bibtex]

@InProceedings{Heflin_2012_BTAS,
  author = {Brian Heflin and Walter J. Scheirer and Terrance E. Boult},
  title = {Detecting and Classifying Scars, Marks, and Tattoos Found in the Wild},
  booktitle = {The IEEE International Conference on Biometrics: Theory, Applications and Systems (BTAS)},
  month = {September},
  year = {2012}
}

- "Open Set Source Camera Attribution," Proceedings of XXV SIBGRAPI - Conference on Graphics, Patterns and Images, August 2012. Best Student Paper Award.

Code

- The Open World Human Activity Recognition code is available on GitHub

- The MSD-Net with Psychophysical Loss code is available on GitHub

- The Handwriting Recognition With Novelty Agent code is available on GitHub

- The EVM code is available on GitHub

- The W-SVM and PI-SVM code is available on GitHub

- The 1-vs-Set Machine code is available on GitHub

Data Sets

- Features for open set training and testing on partitions of Caltech-256, ImageNet, and Labeled Faces in the Wild

Posters

Press Coverage

- National Wildlife: "Using Mobile Devices in Fieldwork"

- New Scientist: "Smartphones make identifying endangered animals easy"